Greetings everyone!

Today, I'm thrilled to share the details of a project we built for the Appwrite hackathon. We call it RoomExpert: an app that takes a picture of a room, generates design variations of it, detects objects in the generated images, and points the user to similar products on Amazon. Under the hood, RoomExpert combines Appwrite for the backend, ControlNet with M-LSD lines for creating room variations, Eden AI for detecting objects in the generated pictures, and the Rainforest API for finding similar products on Amazon.

Team members

The Concept: An Overview

At the core of our application, we aimed to build a system that could take a picture of a room and create imaginative, diverse variations of it. We wanted users not only to visualize these alterations but also to find similar furnishings easily, should they wish to bring a design to life. That is how RoomExpert was born.

Crafting the UI: T3 Stack

For the UI, we used the T3 stack (built around Next.js and TypeScript, with optional pieces such as Tailwind CSS, tRPC, Prisma, and NextAuth.js), which gave us a simple way to build a modular, scalable front end. Its modular structure let us separate the different parts of the system cleanly: we encapsulated related functions, state, and events in separate modules, which made the code easier to maintain and extend.

Deployment was the final but crucial step in our development journey. Vercel, a cloud platform for static sites and serverless functions, made deploying the front end straightforward.

Architecting the Backend: Appwrite

Appwrite, an end-to-end backend server for web, mobile, and Flutter developers, came to our rescue when we set up our backend. It let us handle several backend tasks with little friction:

Authentication: We used Appwrite's built-in authentication system for user sign-up, sign-in, and session management, which saved us from writing extensive security code ourselves.

Database: We used Appwrite's database to store user information and data about the room variations, which gave us a robust and secure place to manage that data.

Storage: Appwrite's storage capabilities were used to save images of rooms and their variations, providing us with an organized and reliable method of managing files.

Functions: We used Appwrite Functions to run server-side code that generates variations of the room based on the options the user selects. I especially liked that the execution timeout can be adjusted in each function's settings to fit our workload.

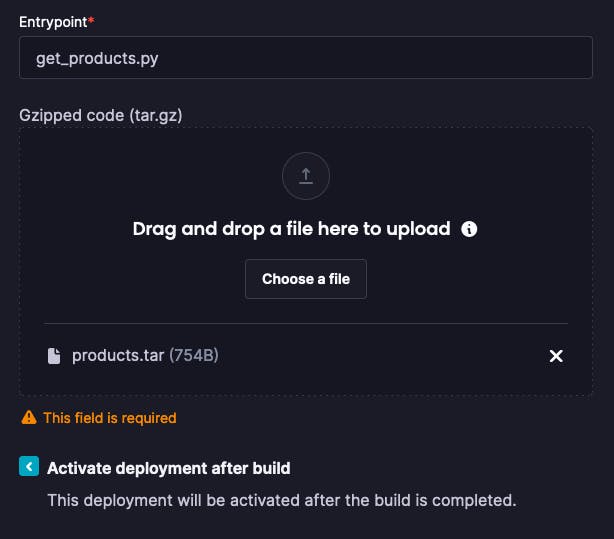
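As a sketch of the options-to-prompt step inside such a function: the allowed styles and room types, the prompt template, and the names `buildVariationPrompt` and `buildPromptsForStyles` are hypothetical, since the post doesn't list RoomExpert's actual options. Restricting input to allow-listed values keeps arbitrary user text out of the prompt.

```javascript
// Hypothetical option lists: the post does not say which choices RoomExpert offers.
const STYLES = ["modern", "scandinavian", "industrial", "bohemian"];
const ROOM_TYPES = ["living room", "bedroom", "kitchen", "home office"];

// Turn the user's selections into a text prompt for the image model.
// Only allow-listed values are accepted, so free text never reaches the model.
function buildVariationPrompt({ style, roomType } = {}) {
  if (!STYLES.includes(style)) {
    throw new Error(`Unsupported style: ${style}`);
  }
  if (!ROOM_TYPES.includes(roomType)) {
    throw new Error(`Unsupported room type: ${roomType}`);
  }
  return `a ${roomType} in ${style} style, interior design photo`;
}

// One prompt per requested style, i.e. one prompt per room variation.
function buildPromptsForStyles(styles, roomType) {
  return styles.map((style) => buildVariationPrompt({ style, roomType }));
}
```

For example, `buildVariationPrompt({ style: "modern", roomType: "bedroom" })` returns `"a bedroom in modern style, interior design photo"`. In the real function, each prompt would be sent to the model together with the room photo.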

A small issue with Appwrite Functions

We wanted to use Appwrite Functions to run server-side logic and offload resource-intensive tasks, such as requesting image generations, to the backend instead of the user's browser.

However, when we tried to upload the function code manually through the Appwrite Console, it treated the file field as empty and showed a warning.

The Appwrite CLI, however, works just fine. Follow the Appwrite docs to set up your Appwrite project first, then run:

```bash
appwrite init function
```

This command creates the folder structure the CLI needs before you can deploy the function to Appwrite.

If you use Node.js as your runtime, note that your function receives the payload (`req.payload`) as a JSON string. To read its keys, parse the JSON first:

```javascript
// req.payload is a JSON string. Fall back to an empty JSON object so that
// JSON.parse below does not throw when no payload is sent.
let payload = req.payload || "{}";

// This logs undefined ❌ (payload is still a string)
console.log(payload.message);

// Parse the JSON first
payload = JSON.parse(payload);

// This works ✅
console.log(payload.message);
```

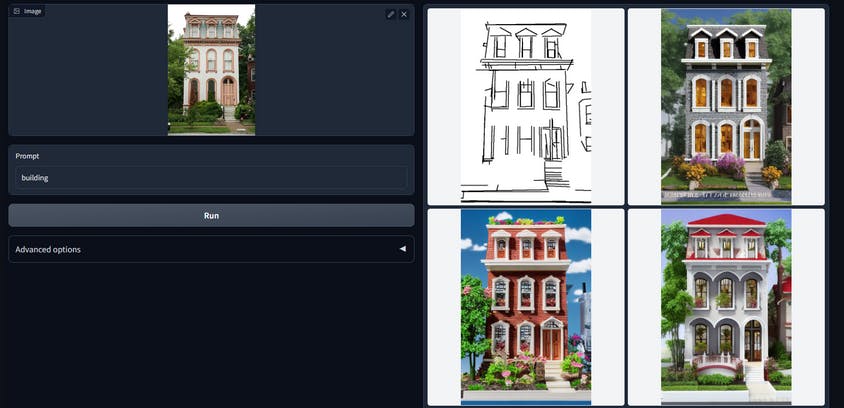
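`JSON.parse` throws on an empty or malformed payload (a plain-text fallback message included), so in practice it helps to wrap the parsing in a helper that never throws. Below is a minimal sketch; `parsePayload` is a hypothetical helper of ours, not part of Appwrite's API.

```javascript
// Parse an Appwrite Function payload (a JSON string in the Node.js runtime)
// without throwing. Returns a plain object, or {} when the payload is
// missing, malformed, or not a JSON object.
function parsePayload(raw) {
  // Tolerate callers that already pass a parsed object.
  if (raw !== null && typeof raw === "object" && !Array.isArray(raw)) {
    return raw;
  }
  if (typeof raw !== "string" || raw.trim() === "") {
    return {};
  }
  try {
    const parsed = JSON.parse(raw);
    // JSON.parse can also return arrays, numbers, strings, or null.
    return parsed !== null && typeof parsed === "object" && !Array.isArray(parsed)
      ? parsed
      : {};
  } catch {
    return {};
  }
}
```

Inside the function you would then write `const { message } = parsePayload(req.payload);`. Because the helper silently falls back to `{}`, the caller should still check that required keys are present and return a clear error when they are not.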

Room Variations: ControlNet with M-LSD Lines

Creating room variations was the most exciting part of our project. We used ControlNet, a neural network architecture that adds spatial conditioning to a pretrained text-to-image diffusion model such as Stable Diffusion, to generate a wide range of room variations. ControlNet makes the generated image follow the structure of a control image, while the text prompt and the user's choices determine the style. In our case, that control image comes from M-LSD, which detects the straight lines in the room photo. Together, they let our users reimagine their rooms with remarkable detail and creativity.

ControlNet is not a standalone CNN that replaces the diffusion model. Instead, it locks the pretrained model's weights and attaches a trainable copy of its encoder blocks, which receives the conditioning image (for us, the M-LSD line map). The copy is connected back to the locked model through "zero convolutions": 1×1 convolution layers whose weights and biases start at zero, so training begins from the unmodified pretrained model and gradually learns how the condition should steer the output. As a result, ControlNet does not just generate an image from a prompt; it also preserves the spatial structure of the input, which gives us a remarkable degree of flexibility when generating room variations.
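For readers who want the mechanism in one line, the ControlNet paper (Zhang, Rao, and Agrawala, "Adding Conditional Control to Text-to-Image Diffusion Models", 2023) describes a controlled block roughly as follows. Here $\mathcal{F}(\cdot;\Theta)$ is a locked pretrained block, $\Theta_c$ the parameters of its trainable copy, $x$ the block's input feature map, $c$ the features of the conditioning image (in our setup, derived from the M-LSD line map), and $\mathcal{Z}(\cdot;\Theta_{z1})$, $\mathcal{Z}(\cdot;\Theta_{z2})$ two zero convolutions:

$$
y_c = \mathcal{F}(x;\Theta) + \mathcal{Z}\big(\mathcal{F}(x + \mathcal{Z}(c;\Theta_{z1});\Theta_c);\Theta_{z2}\big)
$$

Because both zero convolutions start with all-zero weights and biases, $\mathcal{Z}(\cdot) = 0$ at initialization and therefore $y_c = \mathcal{F}(x;\Theta)$: training starts from the unmodified pretrained block and only gradually learns how much the line map should influence it.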

To make our room variations more precise, we paired ControlNet with M-LSD (Mobile Line Segment Detection), a lightweight, real-time line segment detector. Line detection is a fundamental task in computer vision and is particularly useful in our case for understanding the room's layout.

M-LSD was designed to be fast and small enough to run on mobile devices while still detecting the straight segments that outline walls, windows, doors, and large pieces of furniture. The ControlNet M-LSD model is conditioned on maps of these lines, so they act as a structural blueprint that helps it preserve the room's layout while it generates variations.
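To make the hand-off between the two models concrete: M-LSD outputs line segments as endpoint pairs, and in the reference ControlNet annotator those segments are drawn as white lines on a black canvas to form the control image. The sketch below assumes segments arrive as `[x1, y1, x2, y2]` pixel coordinates, drops short ones with a hypothetical `minLength` parameter, and rasterizes the rest with Bresenham's line algorithm into a grayscale buffer. It illustrates the data flow only; it is not the detector or the annotator code used by the hosted model.

```javascript
// Rasterize line segments ([x1, y1, x2, y2] in pixels) into a width x height
// grayscale buffer in row-major order: 0 = background, 255 = line pixel.
// Segments shorter than minLength (a hypothetical filter) are skipped.
function rasterizeSegments(segments, width, height, minLength = 0) {
  const canvas = new Uint8Array(width * height); // starts all black (0)

  for (const [sx1, sy1, sx2, sy2] of segments) {
    if (Math.hypot(sx2 - sx1, sy2 - sy1) < minLength) continue;

    // Bresenham's line algorithm on rounded integer endpoints.
    let x = Math.round(sx1);
    let y = Math.round(sy1);
    const x2 = Math.round(sx2);
    const y2 = Math.round(sy2);
    const dx = Math.abs(x2 - x);
    const dy = -Math.abs(y2 - y);
    const stepX = x < x2 ? 1 : -1;
    const stepY = y < y2 ? 1 : -1;
    let err = dx + dy;

    while (true) {
      // Only plot pixels that fall inside the canvas.
      if (x >= 0 && x < width && y >= 0 && y < height) {
        canvas[y * width + x] = 255;
      }
      if (x === x2 && y === y2) break;
      const e2 = 2 * err;
      if (e2 >= dy) {
        err += dy;
        x += stepX;
      }
      if (e2 <= dx) {
        err += dx;
        y += stepY;
      }
    }
  }
  return canvas;
}
```

For instance, a single diagonal segment `[0, 0, 2, 2]` on a 3×3 canvas lights up exactly the three diagonal pixels. Filtering out very short segments keeps texture noise (rug patterns, wood grain) from cluttering the blueprint.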

In essence, the combination of ControlNet and M-LSD Lines allows us to extract key spatial information from an image, manipulate it according to user preferences, and create a diverse array of room designs. It's a demonstration of the power of deep learning, delivering a unique, personalized user experience.

For this project, we used a public ControlNet M-LSD model hosted on Replicate.
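Calling a public model on Replicate goes through its HTTP predictions API: you create a prediction with a `POST` to `https://api.replicate.com/v1/predictions`, passing the model `version` and an `input` object, and then poll the prediction until its `status` is terminal. The sketch below only builds the request and checks statuses, so it runs offline. `MODEL_VERSION` is a placeholder for the version ID shown on the model's Replicate page, and the input names `image` and `prompt` are assumptions; check the exact input schema of the model you use.

```javascript
const REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions";

// Placeholder: copy the real version ID from the model's page on Replicate.
const MODEL_VERSION = "<controlnet-mlsd-version-id>";

// Build the arguments for fetch(url, init) that create a prediction.
// The input field names (image, prompt) are assumptions about the model's schema.
function buildPredictionRequest({ imageUrl, prompt, token, version = MODEL_VERSION }) {
  if (!imageUrl || !prompt || !token) {
    throw new Error("imageUrl, prompt, and token are required");
  }
  return {
    url: REPLICATE_PREDICTIONS_URL,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ version, input: { image: imageUrl, prompt } }),
    },
  };
}

// A prediction is finished once it reaches one of these statuses.
const TERMINAL_STATUSES = new Set(["succeeded", "failed", "canceled"]);

function isTerminal(prediction) {
  return TERMINAL_STATUSES.has(prediction.status);
}
```

On Node.js 18 or newer, where `fetch` is global, the function would send the request with `fetch(url, init)`, re-fetch the URL in the response's `urls.get` until `isTerminal(prediction)` is true, and then read the generated image URLs from `prediction.output`. Keeping request construction separate from the network call makes it easy to test without spending API credits.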

Object Detection & Referrals: Eden AI and Rainforest Search API

After generating room variations and identifying objects such as lamps, beds, and chairs, we needed a way to connect users to similar products.

We use Eden AI's object detection to locate these objects and then determine each object's dominant color. We pass the object's label and color to a Rainforest API search, which finds similar products on Amazon that we then recommend to the user.
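The color-then-search step can be sketched as follows. It assumes each Eden AI detection has already been converted to a label and a pixel bounding box `{ x, y, width, height }` lying inside the image (Eden AI's raw response format is not reproduced here), and that the image is available as RGBA pixel data, for example from a canvas `getImageData()` call. The dominant color is the average of the most common coarse RGB bucket in the box; the `COLOR_NAMES` palette and all function names are hypothetical. The search URL uses the Rainforest API's `api_key`, `type=search`, `amazon_domain`, and `search_term` parameters.

```javascript
// Hypothetical palette used to turn an RGB value into a search-friendly word.
const COLOR_NAMES = {
  black: [0, 0, 0],
  white: [255, 255, 255],
  gray: [128, 128, 128],
  red: [200, 30, 30],
  green: [40, 150, 60],
  blue: [40, 70, 200],
  yellow: [230, 210, 50],
  brown: [120, 80, 40],
  beige: [225, 205, 170],
};

// Dominant color of an object: quantize each pixel in the bounding box to
// 4 levels per channel, find the most common bucket, and return the mean RGB
// of the pixels in that bucket. `rgba` is row-major RGBA data (4 bytes/pixel)
// and the box is assumed to lie inside the image.
function dominantColor(rgba, imageWidth, { x, y, width, height }) {
  const buckets = new Map(); // bucket key -> { count, r, g, b }
  for (let row = y; row < y + height; row++) {
    for (let col = x; col < x + width; col++) {
      const i = (row * imageWidth + col) * 4;
      const r = rgba[i];
      const g = rgba[i + 1];
      const b = rgba[i + 2];
      const key = ((r >> 6) << 4) | ((g >> 6) << 2) | (b >> 6);
      const entry = buckets.get(key) || { count: 0, r: 0, g: 0, b: 0 };
      entry.count += 1;
      entry.r += r;
      entry.g += g;
      entry.b += b;
      buckets.set(key, entry);
    }
  }
  let best = null;
  for (const entry of buckets.values()) {
    if (!best || entry.count > best.count) best = entry;
  }
  if (!best) throw new Error("Empty bounding box");
  return [best.r, best.g, best.b].map((sum) => Math.round(sum / best.count));
}

// Name a color by its nearest palette entry (squared Euclidean distance in RGB).
function colorName([r, g, b]) {
  let bestName = null;
  let bestDist = Infinity;
  for (const [name, [pr, pg, pb]] of Object.entries(COLOR_NAMES)) {
    const dist = (r - pr) ** 2 + (g - pg) ** 2 + (b - pb) ** 2;
    if (dist < bestDist) {
      bestDist = dist;
      bestName = name;
    }
  }
  return bestName;
}

// Build a Rainforest API search URL for "<color> <label>" on Amazon.
function buildRainforestSearchUrl(label, rgb, apiKey, amazonDomain = "amazon.com") {
  const params = new URLSearchParams({
    api_key: apiKey,
    type: "search",
    amazon_domain: amazonDomain,
    search_term: `${colorName(rgb)} ${label.toLowerCase()}`,
  });
  return `https://api.rainforestapi.com/request?${params}`;
}
```

Using the most common bucket rather than a plain average of the box keeps shading and small highlights from muddying the color. A bounding box still contains some background, though, so the wall or floor can occasionally win; a tighter crop or a segmentation mask would reduce that.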

Public Repo

Our code is available in this GitHub repo.

The Final Picture

The integration of all these technologies allowed us to build an application that not only helped users envision their rooms differently but also offered them an efficient way to make those visions a reality. It was an enriching experience participating in the hackathon, and we believe our application serves as a testament to the fascinating possibilities that emerge when diverse technologies are melded together.

In closing, I'd like to encourage everyone to keep experimenting, keep learning, and keep creating. The journey of technology is a thrilling ride, and every bit of exploration enriches it further.

Thank you Hashnode and Appwrite for hosting this Hackathon 🌸